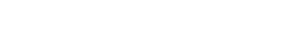

For most use cases, foundation models need a “human in the loop”. This means that human experts or users must be involved in developing, adapting and validating the models. As already described, the great leap in quality from GPT-3 to ChatGPT was achieved with reinforcement learning from human feedback (RLHF).

Since a “human in the loop” is currently necessary for most use cases, the question arises whether this process could work without humans at all.

The “human in the loop” plays a particularly important role in model adaptation and validation. Thanks to their broad training data, the best-known foundation models such as GPT or DeBERTa can be used in many areas, but they must be adapted for specific use cases. Here, human experts usually have to help adapt the model to the user’s needs and validate it to ensure that it works correctly and reliably.